WEEK 5

Behavioral Prototype

In week five, I worked in a group of four to build a behavioral prototype that we could test using Wizard of Oz methods. We were given a choice of four platforms and chose to create an application for a voice-operated assistant. We then used Google Docs, an Amazon Echo Dot, and the Echo’s companion app to bring our prototype to life. Assignment constraints included supporting open-ended exploration of simple tasks through voice commands, exploring users’ tolerance for recognition errors, and not using an existing voice assistant.

The behavioral prototype my group created is a voice assistant application that helps users plan their bus routes. The prototype was designed to operate like an Amazon Echo and was built on the Amazon Alexa app. As with Alexa, users say a wake word, in our case “Oz,” to start interacting with the device and ask it for information. Oz is designed to tell users bus schedules, recommend the best bus route for their destination, and set reminders to help them catch their bus.

Once we knew exactly what we were going to prototype, we “built” it in the Alexa app and, through personal connections, recruited five participants who were unfamiliar with Wizard of Oz methods to interact with it in evaluation sessions. The group creating the prototype and running the user tests filled four roles: a facilitator who ran the user testing sessions, two wizards who chose the responses and played the part of the voice assistant, a scribe who captured the users’ behavior, and a documentarian who recorded the sessions. During the sessions, the documentarian also acted as a wizard to keep the tests running smoothly.

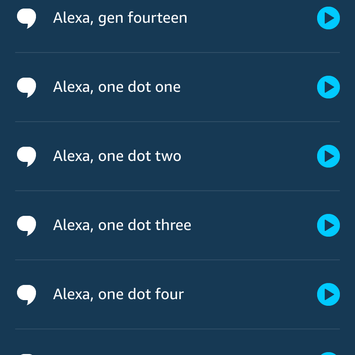

Because this assignment focused on Wizard of Oz methods, my group did not take the time to actually code the voice assistant application. Instead, we used an Amazon Echo Dot as a speaker and played preset responses to user input during testing. As a group, we created three scenarios for our participants to test the prototype’s different features. We then wrote responses to nearly any question a user might ask Oz, our voice assistant, and set up the Echo Dot to say each phrase. We got the Echo Dot to give the exact response we wanted by creating a custom action for each response in the Alexa app, which could then be executed at the push of a button. See the photos below for example responses and how they were executed within the Alexa app.

The user testing sessions were run with all four group members in the room with the participant. Because of my familiarity with the Alexa platform, I was one of the two wizards controlling the prototype behind the scenes. Over a two-hour window we conducted five user tests, most following the same format. Each participant was read a scenario, given a copy of it, and asked to complete it using Oz. Once the participant said the wake word, I activated an indicator light to give the impression that the device was listening. Another wizard then listened to the participant’s question and signaled to me which response to play on the device. This was repeated for all three scenarios, after which participants were asked a few questions and the real wizardry was revealed. Playing preset responses let us simulate an interactive experience between a person and a device while still allowing real-time modification.

The user tests showed that the prototype was fairly believable, with a majority of participants rating its believability 4 out of 5. However, when asked whether they would use this product, most participants said either that they had no need for it or that they were afraid of voice assistants. The prototype excelled at predicting what information users would ask for: of the 37 responses the wizards played across the five sessions, only 2 (about 5%) were for unanticipated requests that required the “I’m sorry, we currently don’t support this feature” response. The tests also showed that the wizards running the prototype need to be prepared for anything. Technical issues on the wizards’ side commonly caused long delays between a user’s question and the voice assistant’s response. The facilitator frequently had to ask participants to repeat their questions, playing the delay off as a technical glitch, to give the wizard more time.

The five user testing sessions showed that the prototype was effective at communicating a large amount of information. In the post-test interviews, a majority of participants commented on the length of the voice assistant’s responses and noted that they contained more information than those of voice assistants they had used in the past. This was a positive for the overall design and prototype, but a negative for the believability of the Wizard of Oz test.

The videos recorded by the documentarian during the user testing sessions were compiled into a two-minute clip, which can be seen below. This clip was played alongside others during an in-class critique session. During the critique, my peers commented that it was difficult to hear the voice assistant speaking in the video. There was also a discussion of how users know when a task is complete. We had some trouble telling when participants were done with a task and could have benefited from the class’s suggestion: when designing user testing tasks, make them depend on each other so that participants can only progress once the previous task has been completed.